Preparation

The MITRE Corporation is located in the town of McLean, not far from Washington. The second round of the ATT&CK Evaluation, in which Kaspersky participated, was based on attacks by the APT29 group. Six months before the test, the experts at MITRE publicly announced a Call for Contributions to collect the most complete information possible on the techniques used by the group. Since we at Kaspersky are very familiar with this APT actor, we gathered our threat intelligence about their methods and sent it to MITRE. We assume that other vendors did the same. Based on all that data and their own research, MITRE created a simulation of the attacks carried out by APT29.

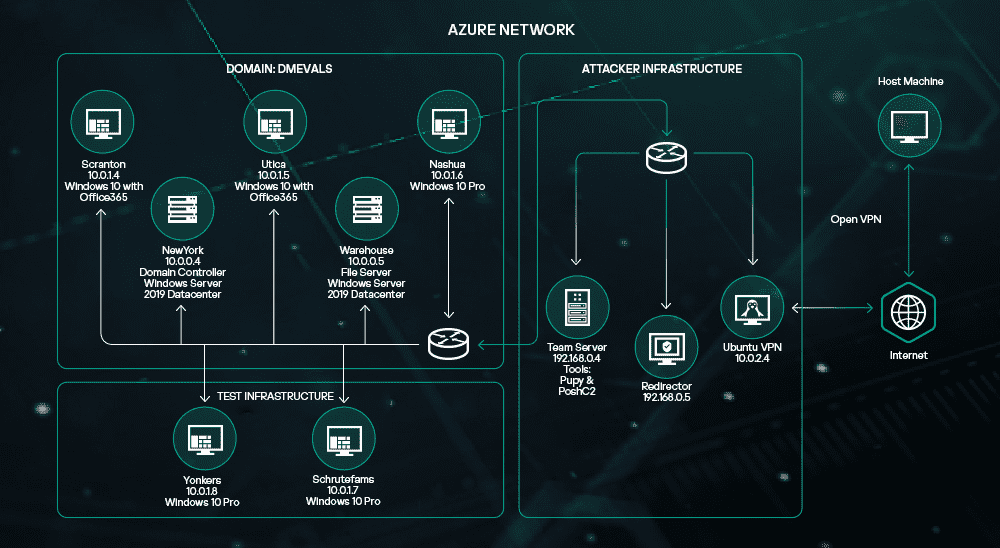

We were then invited to pit our security product against the APT29 simulation. The test infrastructure was based on Microsoft Azure Cloud, and incorporated a domain controller, a file server and several workstations. All of this was controlled by our product, Kaspersky EDR. We had two weeks to deploy – more than enough time to configure and test everything.

Two experts from Kaspersky went to the actual testing site at the MITRE offices, while two analysts from our Threat Research department took part remotely from our Moscow office, along with the Kaspersky Managed Protection team (our MSSP service). We later learned that some other vendors had up to five people working at the test site and as many as 15 others working remotely.

The testing process

Testing took place over three days. During that time, the full attack path was replicated step by step, from the start of the intelligence gathering stage to the moment when traces of the intruders' presence were cleared.

The setup was as follows. There was a large U-shaped table in the auditorium. We sat on one side – the defending Blue Team – connected to the infrastructure. At the other side of the table was the Red Team, the attacking team of MITRE experts. The observers sat in the middle.

During the simulation, the Red Team carried out malicious activities in an attempt to penetrate the infrastructure, gather data, etc. After each attack, we were told exactly what had been done, on which host, what it achieved, and how it should be flagged. We were then expected to show how it was reflected in our product. And so, step by step, the alerts were demonstrated and the observers recorded them. We did have time for lunch during testing, but we were working flat out.

The first two days were all about attacks. On the third day we were told we could fine-tune our product configuration to modify our results. It should be noted that MITRE is very strict about adhering to the rules and ensuring a level playing field for all vendors. From the outset, it’s made clear that the product you submit for evaluation must be the same one that’s available to users. Although it’s possible to make changes before the test, all those changes must be noted so that they can be published alongside the results. The idea is that an independent expert can take the product ‘out of the box’, make the same changes and reproduce the test results.

Configuration changes made on the third day, after the main test, are also permitted, but they too have to be recorded. We modified our product settings and took the test again. These additional test results will be marked in the evaluation report with a special Configuration Change Modifier (see our separate post about the evaluation parameters).

After the test is completed, there’s still time available to send more data – the test bench remains live for a while. This covers cases where, for example, the Blue Team didn’t see or didn’t show an alert that occurred during the simulation, but the necessary data is still available in the product. That data can be retrieved from the deployed solution and sent to the MITRE specialists, with the aim of improving the evaluation results.

What next?

Going through MITRE testing is a valuable experience. The Round 2 Evaluation proved to be a demanding test of the product’s capabilities, and we’re already using the experience we’ve gained to further improve our solutions.

We’ve already applied to participate in the next round of MITRE ATT&CK Evaluation, which promises to be especially interesting because of this year's rule changes. We plan to involve not only Threat Research experts but also representatives from our product teams in future tests.

You can read about the results of our participation in MITRE ATT&CK Evaluation and how to interpret those results, taking into account the features and restrictions of the test, at Kaspersky in MITRE ATT&CK.